Introduction

As artificial intelligence (AI) continues to permeate industries worldwide, governments and regulatory bodies have introduced stringent legal frameworks to ensure ethical, transparent, and responsible AI deployment. Compliance officers specializing in AI regulations play a crucial role in helping organizations navigate these complex requirements. With the advent of the European Union’s AI Act and similar regulations emerging globally, compliance officers must possess a deep understanding of AI technologies, risk management, and legal frameworks to keep their organizations compliant.

The Role of AI Compliance Officers

AI compliance officers are responsible for ensuring that an organization’s AI systems align with evolving regulatory requirements. Their role encompasses several key responsibilities:

- Regulatory Awareness and Interpretation:
  - Keeping up to date with AI laws and frameworks such as the EU AI Act, the U.S. Blueprint for an AI Bill of Rights (a non-binding White House framework), and sector-specific guidelines.
  - Translating legal requirements into actionable compliance strategies for internal teams.

- Risk Assessment and Management:
  - Identifying potential legal, ethical, and operational risks associated with AI deployment.
  - Developing risk mitigation strategies that align with legal obligations and industry best practices.

- Policy Development and Implementation:
  - Creating internal AI governance policies that reflect regulatory expectations.
  - Ensuring these policies are effectively implemented across different departments.

- Audit and Monitoring:
  - Conducting regular compliance audits to assess adherence to AI regulations.
  - Implementing monitoring systems to detect and address non-compliance proactively.

- Training and Awareness:
  - Educating employees on the ethical and legal considerations of AI usage.
  - Promoting a culture of compliance within the organization.

- Collaboration with Stakeholders:
  - Liaising with data protection officers (DPOs), legal teams, and AI development teams.
  - Engaging with regulators and industry bodies to align compliance efforts with emerging standards.
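To make the audit and monitoring duties more concrete, here is a minimal Python sketch of an automated check over an internal inventory of AI systems. It assumes a hypothetical record format (`AISystemRecord`), a hypothetical set of required documents (`REQUIRED_DOCUMENTS`), and an assumed one-year review cycle (`MAX_REVIEW_AGE`). None of these come from any regulation; an organization would substitute its own policy.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


# Hypothetical inventory record; the field names are illustrative, not a standard schema.
@dataclass
class AISystemRecord:
    name: str
    owner: str
    last_review: date
    documents: set[str] = field(default_factory=set)


# Assumed internal policy, not taken from any regulation.
REQUIRED_DOCUMENTS = {"risk_assessment", "data_governance_note", "human_oversight_plan"}
MAX_REVIEW_AGE = timedelta(days=365)


def audit(records: list[AISystemRecord], today: date) -> dict[str, list[str]]:
    """Return findings per system; systems with no findings are omitted."""
    findings: dict[str, list[str]] = {}
    for rec in records:
        # Flag every required document the system is missing, in a stable order.
        issues = [f"missing {doc}" for doc in sorted(REQUIRED_DOCUMENTS - rec.documents)]
        # Flag systems whose last review is older than the assumed review cycle.
        if today - rec.last_review > MAX_REVIEW_AGE:
            issues.append(f"review overdue (last review {rec.last_review.isoformat()})")
        if issues:
            findings[rec.name] = issues
    return findings


# Usage with two hypothetical systems.
inventory = [
    AISystemRecord("support-chatbot", "customer-service", date(2024, 1, 10),
                   {"risk_assessment", "data_governance_note", "human_oversight_plan"}),
    AISystemRecord("cv-screener", "hr", date(2023, 3, 1), {"risk_assessment"}),
]
print(audit(inventory, today=date(2024, 6, 1)))
```

In this example only `cv-screener` is reported: it lacks two of the assumed documents, and its last review is more than a year old.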

[

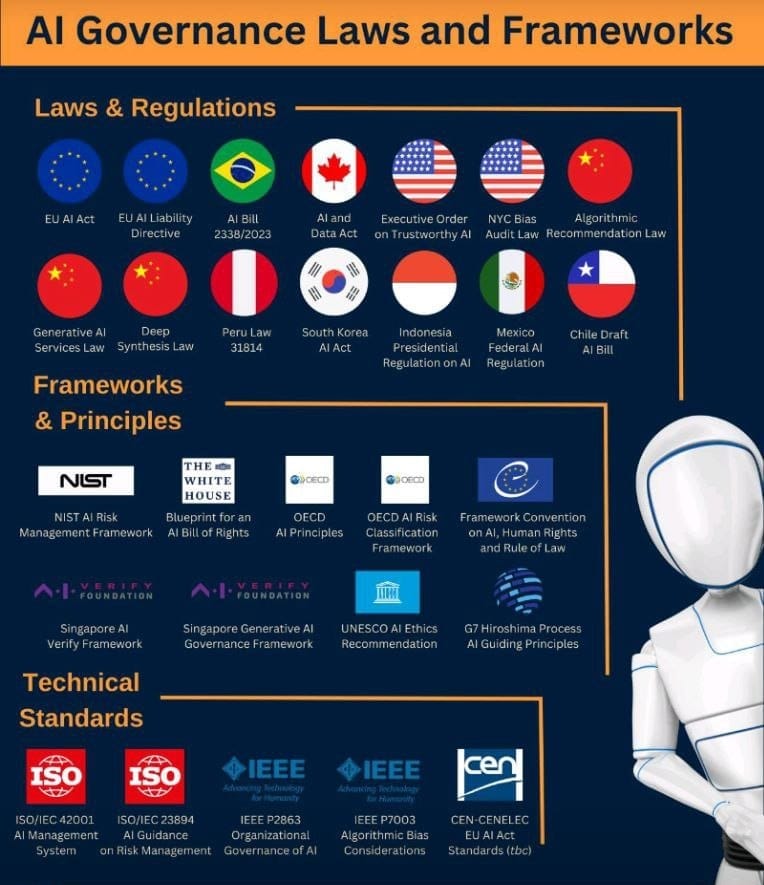

AI governance laws, frameworks, and technical standards from around the world

Navigating the Complex Landscape of AI Governance: A Global Overview As artificial intelligence (AI) continues to transform industries and societies, the need for robust governance frameworks has never been more critical. Across the globe, governments, international organizations, and standards bodies are introducing laws, frameworks, and technical standards to ensure AI

![]()

Compliance Hub WikiCompliance Hub

The EU AI Act and Its Impact

The European Union AI Act, widely expected to become a global benchmark, classifies AI systems into four risk levels:

- Unacceptable Risk: Prohibited practices such as social scoring and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, the latter subject to narrow exceptions.

- High Risk: Systems used in sensitive areas, such as certain healthcare applications and credit scoring, must meet stringent requirements covering risk management, data governance, transparency, human oversight, and accountability.

- Limited Risk: Systems subject to transparency obligations, such as chatbots that must inform users they are interacting with AI.

- Minimal Risk: Applications such as spam filters, which carry no specific obligations under the Act.

AI compliance officers must ensure that their organizations do not deploy prohibited systems, that high-risk systems undergo conformity assessments, and that record-keeping practices are in place to demonstrate compliance.
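The tier structure can be sketched as a lookup from each risk level to a simplified obligation checklist. This is a minimal illustration under stated assumptions: the checklist entries are a condensed, non-exhaustive summary of the categories described above, the system names are hypothetical, and assigning a system to a tier is a legal determination made by people, not by this code.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified, illustrative checklist per tier; not legal advice and not
# an exhaustive list of the Act's requirements.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["do not deploy (prohibited practice)"],
    RiskTier.HIGH: [
        "conformity assessment",
        "risk management system",
        "technical documentation and record-keeping",
        "human oversight",
    ],
    RiskTier.LIMITED: ["inform users they are interacting with AI"],
    RiskTier.MINIMAL: [],
}


def obligations_for(inventory: dict[str, RiskTier]) -> dict[str, list[str]]:
    """Map each system to a copy of the checklist for its (already assigned) tier."""
    return {name: list(OBLIGATIONS[tier]) for name, tier in inventory.items()}


def blocked_systems(inventory: dict[str, RiskTier]) -> list[str]:
    """Return the names of systems assigned to the prohibited tier, sorted."""
    return sorted(name for name, tier in inventory.items() if tier is RiskTier.UNACCEPTABLE)


# Usage with hypothetical systems and assumed tier assignments.
inventory = {
    "support-chatbot": RiskTier.LIMITED,
    "credit-scoring-model": RiskTier.HIGH,
    "spam-filter": RiskTier.MINIMAL,
}
for name, checklist in obligations_for(inventory).items():
    print(name, "->", checklist or "no specific obligations")
print("blocked:", blocked_systems(inventory))
```

Keeping the checklist in one table makes it easy to update when guidance changes, without touching the code that walks the inventory.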

Challenges Faced by AI Compliance Officers

Despite their critical role, AI compliance officers encounter numerous challenges, including:

- Rapidly Evolving Regulations: Keeping pace with new laws and updates across different jurisdictions.

- Technical Complexity: Understanding the intricacies of AI algorithms and their potential biases.

- Cross-Border Compliance: Navigating varying AI regulations in multinational operations.

- Resource Allocation: Securing the necessary tools and personnel to maintain compliance.

- Balancing Innovation and Compliance: Ensuring regulatory adherence without stifling AI innovation.

Best Practices for AI Compliance Officers

To effectively manage AI regulatory compliance, organizations should adopt the following best practices:

- Establish a Dedicated AI Compliance Framework: Develop a structured framework that integrates legal, ethical, and technical considerations.

- Leverage AI Governance Tools: Utilize automated compliance tracking and AI auditing solutions to streamline processes.

- Engage in Continuous Learning: Stay updated with industry trends and participate in regulatory consultations.

- Adopt a Risk-Based Approach: Prioritize compliance efforts based on the potential impact of AI applications.

- Foster Cross-Functional Collaboration: Work closely with legal, IT, and AI development teams to embed compliance from the ground up.
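A risk-based approach is often put into practice with a simple likelihood-times-impact risk matrix. The sketch below assumes 1 to 5 scales and an escalation threshold of 12; both are illustrative choices, not values prescribed by the EU AI Act or any standard, and the class name `ComplianceRisk` and the risks in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComplianceRisk:
    system: str
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scales.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood x impact, ranging from 1 to 25.
        return self.likelihood * self.impact


def prioritize(risks: list[ComplianceRisk], threshold: int = 12) -> list[ComplianceRisk]:
    """Return risks at or above the threshold, highest score first."""
    urgent = [r for r in risks if r.score >= threshold]
    return sorted(urgent, key=lambda r: r.score, reverse=True)


# Usage with hypothetical risks.
risks = [
    ComplianceRisk("cv-screener", "possible bias against protected groups", 3, 5),
    ComplianceRisk("support-chatbot", "missing AI disclosure", 2, 3),
    ComplianceRisk("credit-model", "incomplete technical documentation", 4, 4),
]
for risk in prioritize(risks):
    print(risk.score, risk.system, "-", risk.description)
```

Multiplying two ordinal scores is a coarse heuristic: it is useful for ordering a compliance backlog, but the resulting numbers should not be read as precise measurements of risk.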

Future Outlook of AI Compliance

The role of AI compliance officers will continue to evolve as regulations become more comprehensive and enforcement mechanisms strengthen. Organizations that proactively invest in AI compliance will not only mitigate legal risks but also enhance trust with customers, investors, and regulators. Techniques such as explainable AI (XAI), together with AI ethics frameworks, are likely to play a pivotal role in shaping the compliance landscape.

Conclusion

AI compliance officers are instrumental in ensuring organizations navigate the intricate landscape of AI regulations. As regulatory frameworks like the EU AI Act become more prominent, their expertise in aligning AI deployments with legal and ethical standards will be indispensable. By adopting a proactive and informed approach, organizations can harness the power of AI responsibly while maintaining regulatory compliance.